The math behind: Implementing Ethics in Autonomous Vehicles

Author: Lau Johansson

11th August 2020

Reading time: 15 min.

This blog post elaborates on how the three ethical theories from my previous blog post have been mathematically formalized. Before reading on, I recommend spending some time understanding that post.

Mathematical formalization

I propose three mathematical formalizations of the ethical theories. Mathematical formalization, as used in this blog, is the process of using mathematical logic to examine problems in the world around us.

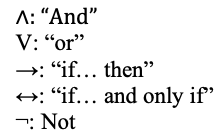

Symbols that are often used are:

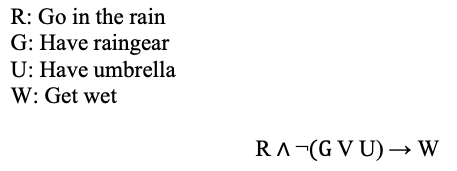

Here’s an example:

“If you go in the rain and don’t have any rain gear or umbrella, you will get wet”

This could be formalized as:
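One possible rendering in predicate logic, where the predicate names InRain, HasRainGear, HasUmbrella and Wet are assumptions chosen here purely for illustration:

```latex
\forall x \, \bigl( \mathit{InRain}(x) \land \lnot \mathit{HasRainGear}(x) \land \lnot \mathit{HasUmbrella}(x) \rightarrow \mathit{Wet}(x) \bigr)
```

Read aloud: for every person x, if x is in the rain and has neither rain gear nor an umbrella, then x gets wet.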

If this does not seem very intuitive to you, do not worry. I will try to keep the rest of the article in “human” language and only provide a few formulas for the interested reader.

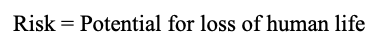

Formalizing risk

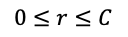

To incorporate risk in the formalizations, some assumptions are made. Two stages of risk are distinguished, where the variable $r_{1,T}$ represents the aggregated risk that decision d causes at time T. $r_{i,t_0}$ represents the risk for person i before a decision is made, and $r_{i,t_1}$ is the risk after the decision is chosen.

It is assumed that the risk before and after a decision is made can be compared. The following applies:

Here, the values of C represent some kind of arbitrary risk value.

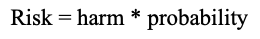

Risk can be calculated as:

The project does not model probabilities or degrees of harm: the trolley scenarios are limited to people dying or not. Therefore, risk is formulated as:
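A rough sketch of what this could look like, assuming the common view that risk combines the probability of harm with the harm itself, and assuming the simplification means each person's risk is either 0 or 1. The symbols p and h and the 0/1 encoding are assumptions made here, not necessarily the notation of the thesis:

```latex
r = p \cdot h
\qquad\text{and, in the simplified setting,}\qquad
r_{i,t} =
\begin{cases}
1 & \text{if person } i \text{ dies} \\
0 & \text{otherwise}
\end{cases}
```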

The risk framework

In general, I use a kind of “risk framework”. Some of the research papers that inspired me can be found in the referenced project at the bottom of the post.

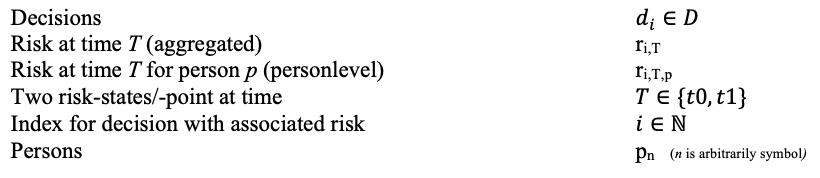

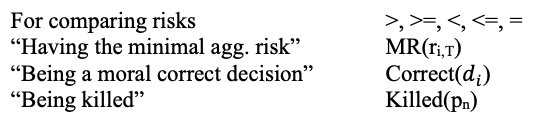

The following variables are used:

D is the set of all possible decisions.

The predicates:

Formalizing utilitarianism

Using the risk framework, I have formalized utilitarianism in natural language as: “Choose the decision for which the aggregated risk involved is the smallest (minimal) possible in an accident scenario”.

Formalized mathematically:

This formula can be read as “For all possible decisions in an accident scenario, the correct decision is the one where the aggregated risk after the decision has been made is as small as possible”.
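The utilitarian rule can be sketched in a few lines of Python. This is a minimal illustration, not the thesis's implementation: the dictionary encoding, the function names `aggregated_risk` and `utilitarian_choice`, and the choice to aggregate by summing are all assumptions.

```python
from typing import Dict

# Hypothetical encoding: decision name -> {person: risk after the decision}.
RiskAfter = Dict[str, Dict[str, float]]


def aggregated_risk(risks: Dict[str, float]) -> float:
    """Aggregate individual risks by summing them (an assumed aggregation rule)."""
    return sum(risks.values())


def utilitarian_choice(risk_after: RiskAfter) -> str:
    """Return the decision with the smallest aggregated post-decision risk."""
    return min(risk_after, key=lambda d: aggregated_risk(risk_after[d]))


# Illustrative values only: risk is 1 if the person dies, 0 otherwise.
example = {
    "d1": {"p1": 1, "p2": 1, "p3": 0},
    "d2": {"p1": 0, "p2": 0, "p3": 1},
}
print(utilitarian_choice(example))  # d2 (aggregated risk 1 versus 2)
```

If two decisions tie, `min` returns the first one it encounters, so a real system would need an explicit tie-breaking rule.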

Formalizing duty ethics

The phrase “To be treated as a means” must be put in relation to risk. Here two interpretations are investigated (called F1 and F2 from now on):

- F1: You are treated as a means if you are affected by an action

- F2: You are treated as a means if you are actively used to achieve something else

The first interpretation (F1) is formalized (in natural language) as:

“A person is treated only as a means if the decision causes the person's risk to increase. Therefore, a decision is morally correct if it affects a person's risk so that the risk is either diminished or unchanged. Everyone involved in a decision must have decreased or unchanged risk”

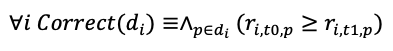

Formalized mathematically (F1):

This formula can be read as “For all possible decisions in an accident scenario, the correct decision is one where, for each person, the risk after the decision has been made is the same or has decreased”.
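As a minimal sketch, the F1 condition can be checked like this. The function name `f1_permits` and the dictionary encoding of risks per person are assumptions made for illustration.

```python
from typing import Dict


def f1_permits(risk_before: Dict[str, float], risk_after: Dict[str, float]) -> bool:
    """F1: a decision is permitted only if nobody's risk increases."""
    return all(risk_after[p] <= risk_before[p] for p in risk_before)


# Illustrative values only: p1 is already at risk before the decision.
before = {"p1": 1, "p2": 0}
unchanged = {"p1": 1, "p2": 0}   # nobody is affected
shifted = {"p1": 0, "p2": 1}     # the risk is moved from p1 to p2

print(f1_permits(before, unchanged))  # True
print(f1_permits(before, shifted))    # False
```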

The second interpretation (F2) is formalized (in natural language) as:

“A person is treated only as a means if the decision causes the person's risk to increase. Therefore, a decision is morally correct if it affects a person's risk so that the risk is diminished. Moreover, all OTHERS involved in a decision must have decreased or unchanged risk”

This can more easily be read as “the decision must not make it worse for anyone and it must make it better for at least one person”.

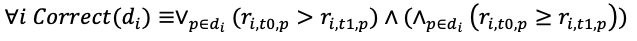

Formalized mathematically (F2):

This formula can be read as “For all possible decisions in an accident scenario, the correct decision is one where the risk after the decision has been made is lowered for at least one person AND the risk for all others is the same or has decreased”.
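A minimal sketch of the F2 condition, again with an assumed function name (`f2_permits`) and an assumed dictionary encoding of risks per person:

```python
from typing import Dict


def f2_permits(risk_before: Dict[str, float], risk_after: Dict[str, float]) -> bool:
    """F2: nobody's risk increases AND at least one person's risk decreases."""
    nobody_worse = all(risk_after[p] <= risk_before[p] for p in risk_before)
    someone_better = any(risk_after[p] < risk_before[p] for p in risk_before)
    return nobody_worse and someone_better


# Illustrative values only: p1 is already at risk before the decision.
before = {"p1": 1, "p2": 0}
unchanged = {"p1": 1, "p2": 0}   # nobody better off
improved = {"p1": 0, "p2": 0}    # p1 better off, nobody worse off
shifted = {"p1": 0, "p2": 1}     # p1 better off, but p2 worse off

print(f2_permits(before, unchanged))  # False
print(f2_permits(before, improved))   # True
print(f2_permits(before, shifted))    # False
```

Compared with F1, the only difference is the extra requirement that someone actually benefits, which is why an option that changes nothing passes F1 but fails F2.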

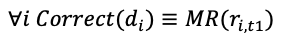

Formalizing rights ethics

According to rights ethics, humans have the right to live. This can also be viewed as humans having the right not to be assaulted. This right (or claim) protects humans against harm. Relating to the risk framework, it could be rephrased as humans having a right not to have their risk affected or changed. The mathematical formalization of rights ethics assumes that the individual has a Hohfeldian claim that others must not affect the individual's risk.

Formalized naturally: “For each person in a scenario, the risk before and after a decision is made must be equal, which corresponds to an unchanged risk”

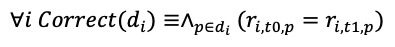

Formalized mathematically:

This formula can be read as “For all possible decisions in an accident scenario, the correct decision is one where, for each person, the risk after the decision has been made is the same as before”.
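A minimal sketch of the rights-ethics condition. The function name `rights_permits` and the dictionary encoding are assumptions; exact equality is used because the risks in this post are simple 0/1 values, whereas real-valued risks would need a tolerance.

```python
from typing import Dict


def rights_permits(risk_before: Dict[str, float], risk_after: Dict[str, float]) -> bool:
    """Rights ethics: every person's risk must be exactly unchanged."""
    return all(risk_after[p] == risk_before[p] for p in risk_before)


# Illustrative values only: p1 is already at risk before the decision.
before = {"p1": 1, "p2": 0}
unchanged = {"p1": 1, "p2": 0}
improved = {"p1": 0, "p2": 0}    # even a lowered risk counts as a change

print(rights_permits(before, unchanged))  # True
print(rights_permits(before, improved))   # False
```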

How the theories could work out in real life

This section looks very similar to the previous post. You have already seen one of the scenarios - but I will introduce another one for you.

Scenario 1

An autonomous car drives with a person, person A.

Two persons, B1 and B2, stand in the middle of the road.

The car has two options.

Option 1: The car can drive into person B1 and B2.

Option 2: The car can turn off the road and drive into a pedestrian, person C.

If the car drives into one or more persons, these will be killed.

The moral decision must be made by Person A’s autonomous car.

Scenario 2

An autonomous car drives with a person, person A.

Five persons, B1, B2, B3, B4, and B5 stand in the middle of the road.

They will be killed if the car hits them.

Another autonomous vehicle is parked, inside is person C.

The car in which person C sits can drive out in front of person A’s car. This will save the five persons on the road but, unfortunately, kill person C.

Option 1: Person C stays parked.

Option 2: Person C drives out in front of Person A.

The moral decision must be made by Person C’s autonomous car.

Morally "correct" decisions in the scenarios

Please keep in mind that the following conclusions are based on the mathematical formalizations made in the bachelor thesis. The conclusions are therefore conditioned by the underlying assumptions.

Decisions can either be:

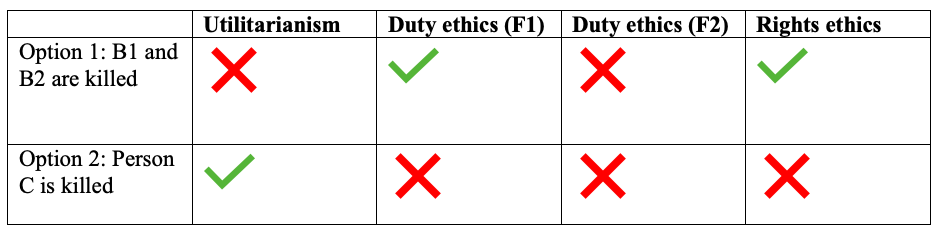

Scenario 1

Hopefully, it is clear to you as a reader that a utilitarian would prefer to kill person C instead of B1 and B2. This is exactly the conclusion the formalization reaches.

Under duty ethics (F1), which most closely resembles Kant's formulation, B1 and B2 already stand in the path of the car. Killing person C would mean using person C only as a means to an end (to save B1 and B2).

None of the involved persons' risks will be decreased by any of the options. Therefore, according to duty ethics (F2), the stricter version, neither of the options is morally correct!

Using the formalized version of rights ethics, it is morally correct to kill B1 and B2. An important assumption is that it is B1’s and B2’s own fault that they are at risk of getting killed. If this assumption were not made, rights ethics would have concluded that the decision is morally incorrect.

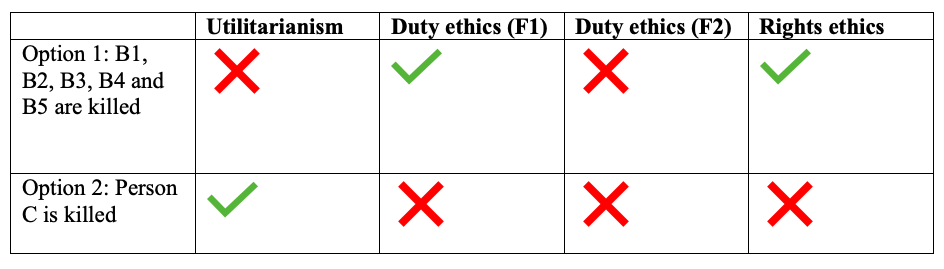
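To make the Scenario 1 reasoning concrete, here is a small sketch that encodes the scenario as 0/1 risks and applies the four rules. The encoding is an assumption made for illustration: in particular, B1 and B2 are given a baseline risk of 1 because the car is already heading towards them, which mirrors the "own fault" assumption. All names in the code are hypothetical.

```python
from typing import Dict

Risks = Dict[str, int]

# Assumed baseline: B1 and B2 are already at risk before any decision is made.
before: Risks = {"A": 0, "B1": 1, "B2": 1, "C": 0}
options: Dict[str, Risks] = {
    "option_1": {"A": 0, "B1": 1, "B2": 1, "C": 0},  # drive into B1 and B2
    "option_2": {"A": 0, "B1": 0, "B2": 0, "C": 1},  # turn off the road, hit C
}


def duty_f1(b: Risks, a: Risks) -> bool:
    """Nobody's risk increases."""
    return all(a[p] <= b[p] for p in b)


def duty_f2(b: Risks, a: Risks) -> bool:
    """Nobody's risk increases and at least one person's risk decreases."""
    return duty_f1(b, a) and any(a[p] < b[p] for p in b)


def rights(b: Risks, a: Risks) -> bool:
    """Everybody's risk is unchanged."""
    return all(a[p] == b[p] for p in b)


# Utilitarianism compares options with each other, so it is computed separately.
lowest = min(sum(a.values()) for a in options.values())
verdicts = {
    "utilitarianism": {n: sum(a.values()) == lowest for n, a in options.items()},
    "duty ethics (F1)": {n: duty_f1(before, a) for n, a in options.items()},
    "duty ethics (F2)": {n: duty_f2(before, a) for n, a in options.items()},
    "rights ethics": {n: rights(before, a) for n, a in options.items()},
}

for theory, result in verdicts.items():
    print(theory, result)
```

Under these assumptions, utilitarianism accepts only option 2, F1 and rights ethics accept only option 1, and F2 accepts neither. Changing the baseline for B1 and B2 from 1 to 0 changes the outcome, which shows how sensitive the conclusions are to the underlying assumptions.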

Scenario 2

What is interesting to see is the conclusion of duty ethics (F1). It is morally incorrect to choose to kill Person C to save B1, B2, B3, B4, and B5. This scenario then shows that, according to Kant, it is morally incorrect to use YOURSELF only as a means to an end.

Using the formalized version of rights ethics, it is morally correct to kill B1, B2, B3, B4, and B5. An important assumption is that it is their own fault that they are at risk of getting killed. If this assumption were not made, rights ethics would have concluded that the decision is morally incorrect.

One could question whether it is true, from a rights ethics perspective, that it is morally incorrect to kill person C. If rights ethics assumes that a person is free and independent, then it must be “allowed” for a person to choose to die to save another person. But... will this interfere with the rights of B1, B2, B3, B4, and B5 to stand on the road with the risk of getting killed? Wow... this can get complex to analyze! I will not dig any deeper into this.

Reflections

Math and human language

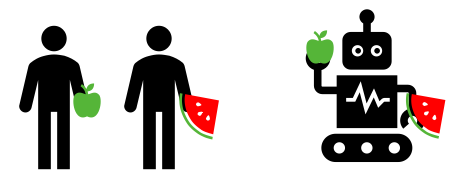

Mathematics is a great tool for translating human language so that computers can understand it. However, the implementation of formalized ethics is limited by a lot of assumptions. Changing one assumption can change the outcome of a decision completely. Moreover, mathematical logic is often more “strict” than human language. Suppose someone says to you: “You can either take an apple or a piece of watermelon”. I bet you would think you could take either an apple or a piece of watermelon, but not both. But might someone think that it is OK to take both? A robot reading “or” as the logical (inclusive) or would actually think that it is allowed to take both fruits. These ambiguities in human language are a major challenge when “translating” human language to AVs.

In the context of ethics and AVs, the fruits could represent human lives. The robot could be an AV.
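The apple-and-watermelon ambiguity can be made concrete with a tiny sketch that contrasts the logical (inclusive) "or" with the everyday (exclusive) reading. The function names are chosen here for illustration.

```python
def inclusive_or(apple: bool, watermelon: bool) -> bool:
    """Logical 'or': allowed if you take at least one fruit, possibly both."""
    return apple or watermelon


def exclusive_or(apple: bool, watermelon: bool) -> bool:
    """Everyday 'either ... or': allowed only if you take exactly one fruit."""
    return apple != watermelon


# Print the truth table for both readings.
for apple in (False, True):
    for watermelon in (False, True):
        print(apple, watermelon,
              inclusive_or(apple, watermelon),
              exclusive_or(apple, watermelon))
```

The two readings only disagree in the last row, where both fruits are taken: the inclusive reading allows it and the exclusive reading does not.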

Quantifying risk

When using the term “risk” for implementing ethics in AVs, it is necessary to explicitly define what risk means in relation to traffic. In a simple setting, risk consists of a combination of harm and the probability of harm. Probability theory will definitely have an important role in the implementation of machine learning algorithms and ethics in AVs. However, this is not a research area I have dived into. Regarding quantification of harm, I have come across a paper that proposes using a mathematical collision model combined with multi-criteria decision making (MCDM). Find the paper here.

Thanks for reading the post!

Links

The content of this post is mainly based on the bachelor thesis by Lau Johansson. Unfortunately, it only exists in Danish.